5 ways to mitigate biological sample integrity loss

Biological research hinges on the collection and preservation of often precious samples for future analysis. Preserving sample integrity over time is crucial to avoiding degradation. Loss of integrity can lead to data loss or unreliable results,...

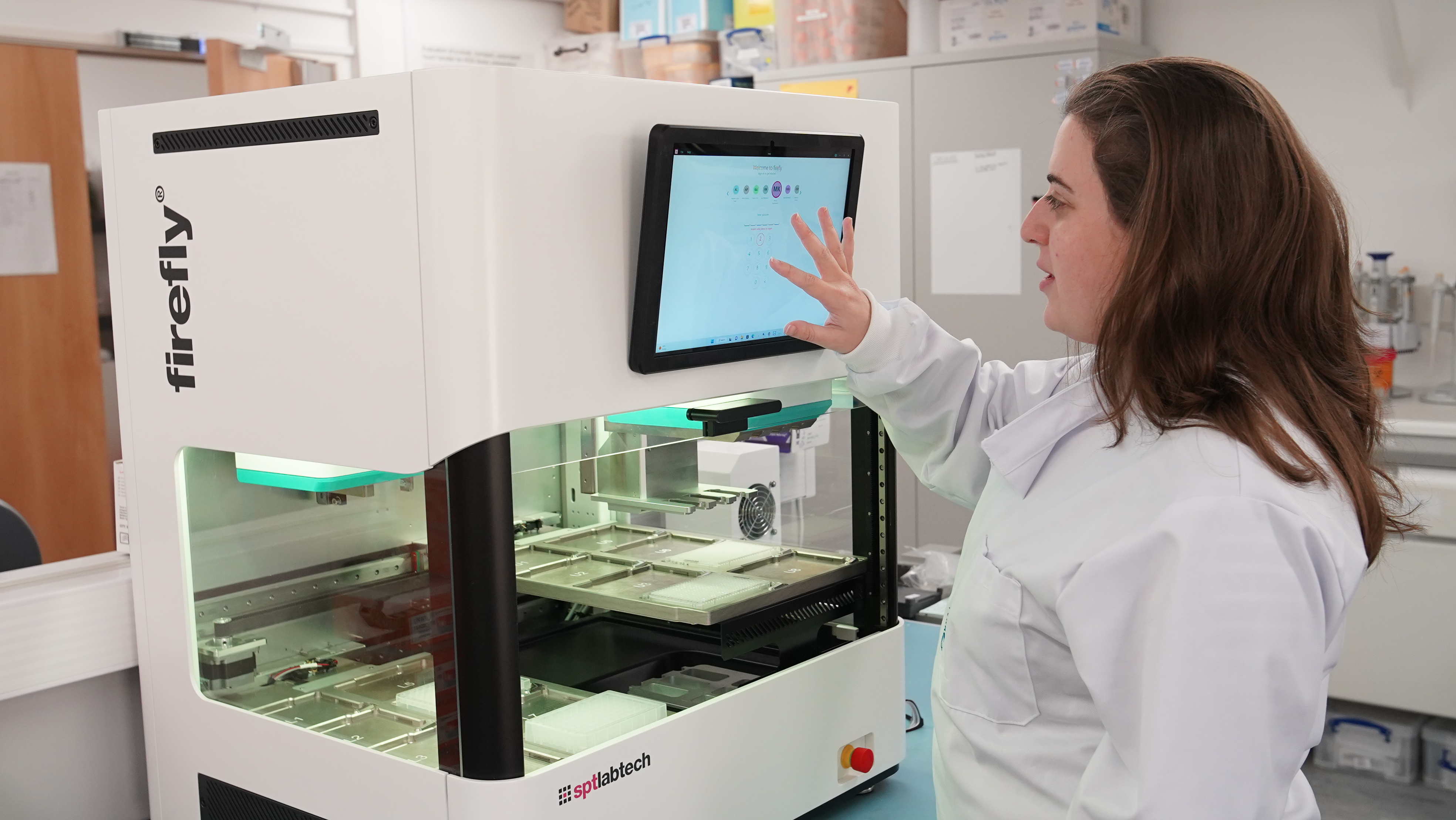

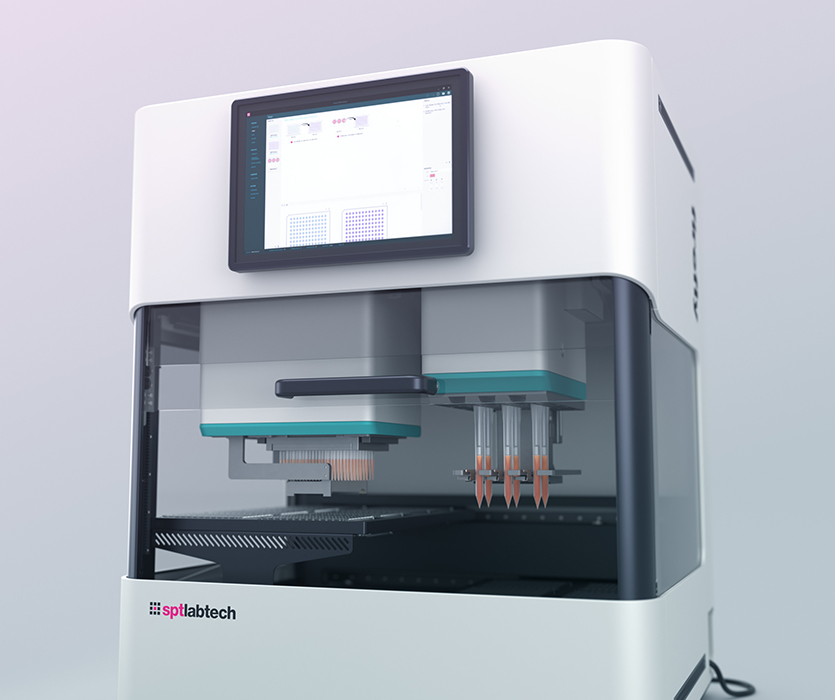

Elevating liquid handling with intuitive software

At SPT Labtech, our commitment to innovation extends beyond hardware; our software plays a crucial role in making automation accessible for all. Michelle Barnett, a Software Engineer on the firefly® team, shares her insights on the significance of...

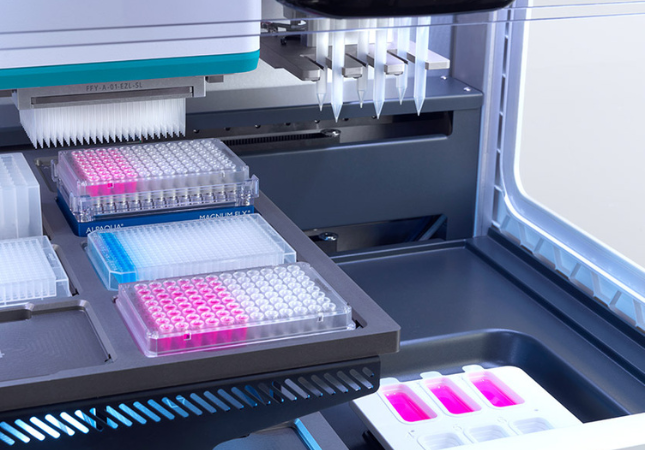

Streamlining Science: How Does Lab Automation Drive Efficiency?

In today’s scientific landscape, labs are under immense pressure to reduce costs, shorten timelines, and maximize output, often from limited resources. Lab automation has emerged as an incredibly valuable tool to address these challenges, empowering...

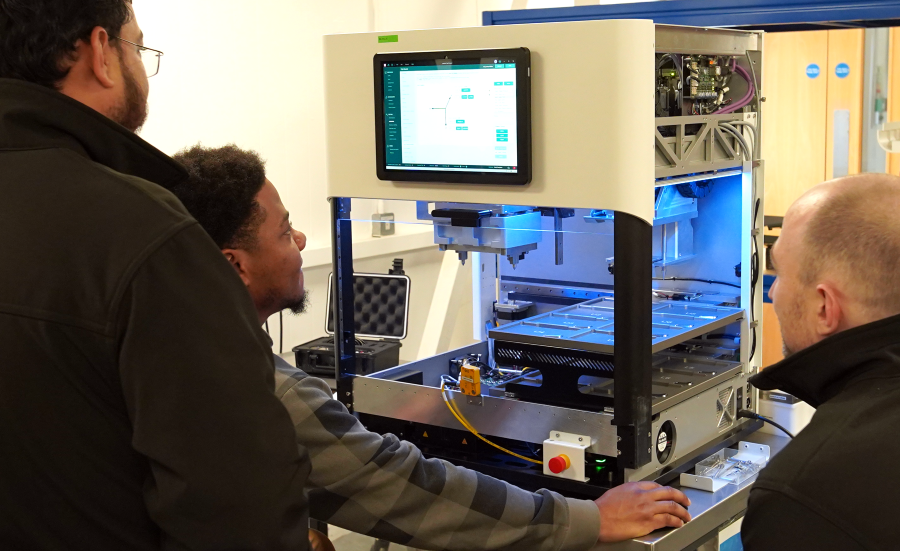

Illuminating Innovation: What Does it Take to Become a firefly® Engineer?

Every one of our engineers plays a pivotal role in delivering the highest standards for our firefly customers. Laboratories globally rely on firefly for its liquid handling precision. Our commitment to customers goes beyond just functionality; it's...

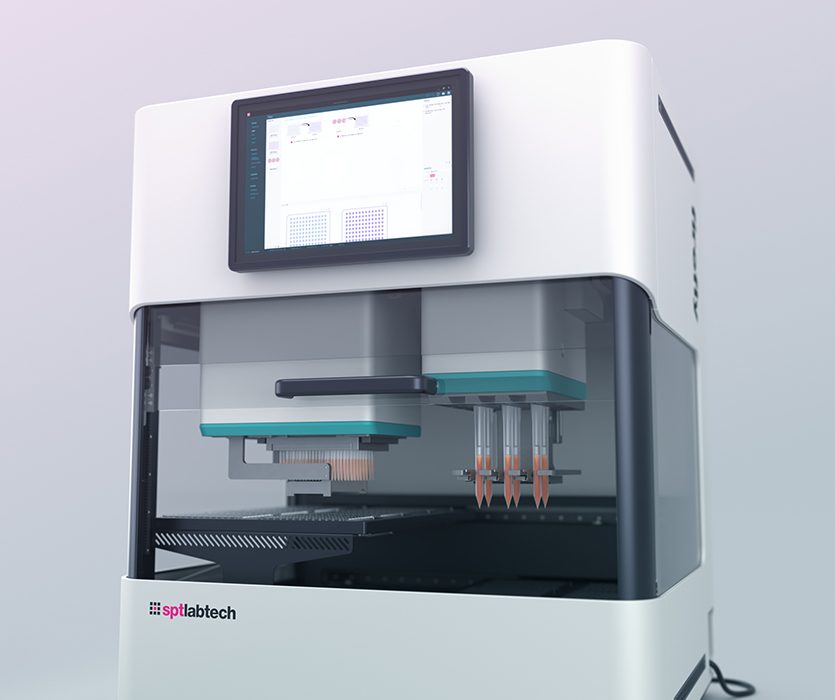

Introducing the latest features and enhancements to firefly® software

Despite its compact benchtop footprint, firefly packs a huge amount of functionality, featuring capabilities for pipetting, dispensing, shaking and incubation. Throughout its development, our commitment has been to enhance accessibility and...

SPT Labtech 2023 Year in Review

As we bid farewell to a remarkable year, we at SPT Labtech take a moment to reflect on the extraordinary strides we've made in 2023. It's been a year of innovation, collaboration, and advancement, marking significant milestones in our journey...

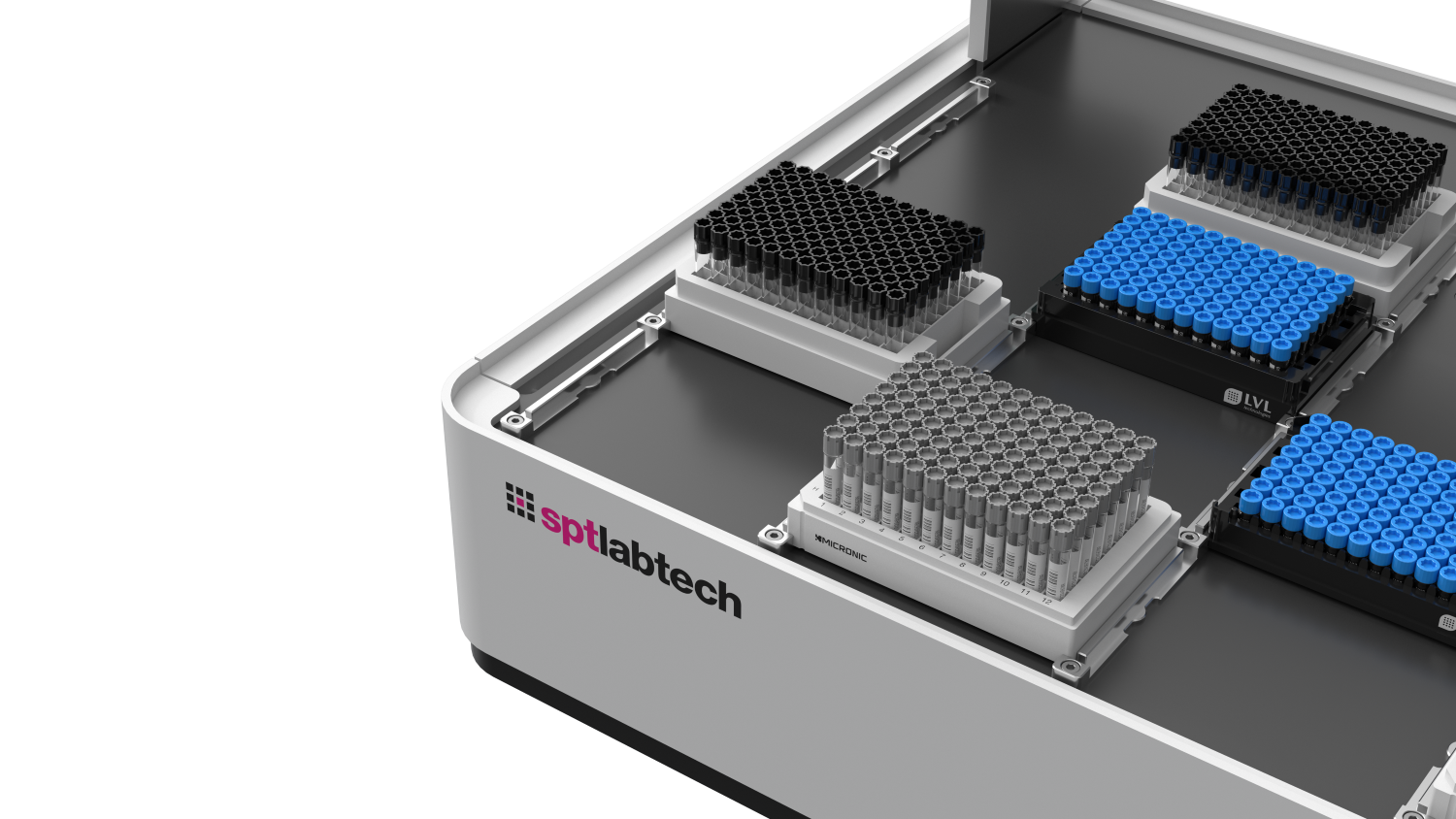

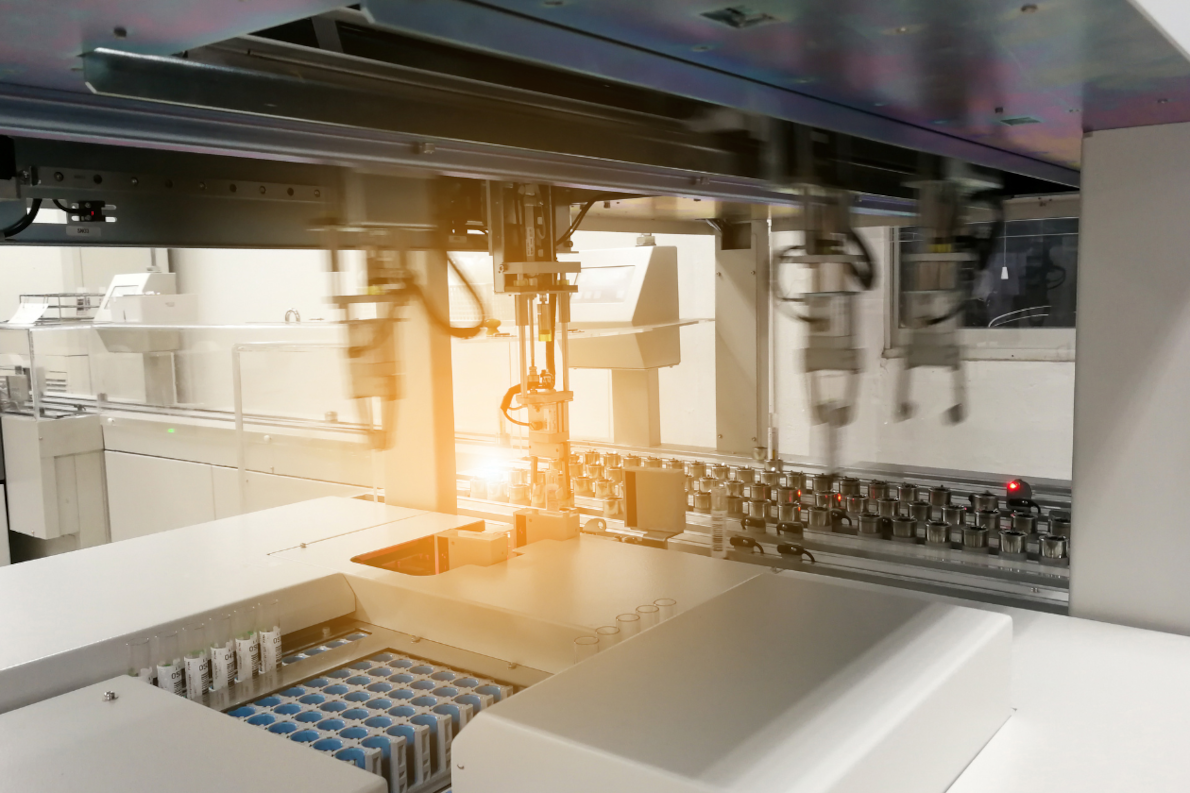

Build a tube handling system that works for you in 2 easy steps

Recognizing that laboratories have diverse requirements, we believe in abandoning a one-size-fits-all approach when it comes to tube handling. As an integral part of your research, tube handling systems must be able to address your specific needs to...

The Cutting-Edge Advantages of Sample Management and the Future Landscape

In the realm of scientific research and diagnostics, effective sample management has long been a critical concern. It serves as the bedrock upon which high-quality data and reliable outcomes are built. Consequently, an advanced and automated system...
